These days, the way we do SEO is somewhat different from how things were done about 10 years ago. There’s one important reason for that: search engines have been continuously improving their algorithms to give searchers the best possible results. Over the last decade, Google, as the leading search engine, introduced several major updates, and each of them has changed best practices for SEO. Here’s a list (by no means exhaustive) of Google’s important algorithm updates so far, as well as some of their implications for search and SEO.

2011 – Panda

Obviously, Google was around long before 2011. We’re starting with the Panda update because it was the first major update in the ‘modern SEO’ era. Google’s Panda update targeted websites that were created purely to rank in the search engines. It focused mostly on on-page factors: in other words, it assessed whether a website genuinely offered information about the search term visitors used.

Two types of sites were hit especially hard by the Panda update:

- Affiliate sites (sites which mainly exist to link to other pages).

- Sites with very thin content.

Google periodically re-ran the Panda algorithm after its first release, and included it in the core algorithm in 2016. The Panda update has permanently affected how we do SEO, as site owners could no longer get away with building a site full of low-quality pages.

2012 – Venice

Venice was a noteworthy update, as it showed that Google understood that searchers are sometimes looking for results that are local to them. After Venice, Google’s search results included pages based on the location you set, or your IP address.

2012 – Penguin

Google’s Penguin update looked at the links websites got from other sites. It analyzed whether backlinks to a site were genuine, or if they’d been bought to trick the search engines. In the past, lots of people paid for links as a shortcut to boosting their rankings. Google’s Penguin update tried to discourage buying, exchanging or otherwise artificially creating links. If it found artificial links, Google assigned a negative value to the site concerned, rather than the positive link value it would have previously received. The Penguin update ran several more times after it first appeared, and Google added it to the core algorithm in 2016.

As you can imagine, websites with a lot of artificial links were hit hard by this update. They disappeared from the search results, as the low-quality links suddenly had a negative, rather than positive, impact on their rankings. Penguin has permanently changed link building: it no longer suffices to get low-effort, paid backlinks. Instead, you need a solid link building strategy that earns relevant links from valued sources.
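To make that reversal concrete, here’s a toy sketch in Python. It is not Google’s actual algorithm, which isn’t public: the `Backlink` class, the `value` numbers and the `artificial` flag are all hypothetical, invented purely for illustration. It only shows how the same set of links can flip from helping to hurting once artificial links count against a site.

```python
from dataclasses import dataclass


@dataclass
class Backlink:
    source: str
    value: float      # hypothetical link value, made up for this example
    artificial: bool  # hypothetical flag: bought, exchanged or otherwise manufactured


def link_score(links, penalize_artificial):
    """Sum link values; if penalize_artificial is True, artificial links subtract."""
    total = 0.0
    for link in links:
        if link.artificial and penalize_artificial:
            total -= link.value
        else:
            total += link.value
    return total


# Example data: one genuine link and three paid ones (all names are placeholders).
links = [
    Backlink("industry-blog.example", 0.5, artificial=False),
    Backlink("link-farm-1.example", 0.25, artificial=True),
    Backlink("link-farm-2.example", 0.25, artificial=True),
    Backlink("link-farm-3.example", 0.25, artificial=True),
]

before = link_score(links, penalize_artificial=False)  # every link counts in favor
after = link_score(links, penalize_artificial=True)    # artificial links count against

print(before, after)  # 1.25 -0.25
```

In this made-up example, the paid links push the score up when every link counts, and drag it below zero once they are penalized. Only the genuine link still helps.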

2012 – Pirate

The Pirate update was introduced to combat the illegal distribution of copyrighted content. For the first time, a high number of DMCA (Digital Millennium Copyright Act) takedown requests against a website counted as a negative ranking factor.

2013 – Hummingbird

The Hummingbird update saw Google lay the groundwork for voice search, which was (and still is) becoming more and more important as more devices (Google Home, Alexa) use it. Hummingbird pays more attention to each word in a query, ensuring that the whole search phrase is taken into account, rather than just particular words. Why? To understand a user’s query better and to be able to give them the answer, instead of just a list of results.

The impact of the Hummingbird update wasn’t immediately clear, as it wasn’t directly intended to punish bad practice. In the end, it mostly reinforced the view that SEO copy should be readable and use natural language, and that it should use synonyms rather than being over-optimized for the same few words.
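If you want a rough feel for what ‘over-optimized for the same few words’ looks like, you could measure how much of a text a single keyword takes up. The sketch below is a simple word-count heuristic of our own, not something Google has published: the `keyword_density` function and both sample texts are hypothetical.

```python
import re


def keyword_density(text, keyword):
    """Return the share of words in text that exactly match keyword (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


# Hypothetical copy: one keyword-stuffed snippet and one using natural synonyms.
stuffed = "Cheap shoes. Buy cheap shoes here. Cheap shoes, cheap prices."
natural = "Looking for affordable footwear? Our budget sneakers and discounted boots ship free."

print(keyword_density(stuffed, "cheap"))  # 4 of the 10 words are "cheap"
print(keyword_density(natural, "cheap"))  # the keyword never appears literally
```

The second text covers the same topic without repeating the keyword at all, which is the kind of natural, varied wording this update rewarded.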

2014 – Pigeon

Another bird-related Google update followed in 2014 with Google Pigeon, which focused on local SEO. The Pigeon update affected both the results pages and Google Maps. It led to more accurate localization, giving preference to results near the user’s location. It also aimed to make local results more relevant and higher quality, taking organic ranking factors into account.

2014 – HTTPS/SSL

To underline the importance of security, Google decided to give a small ranking boost to sites that correctly implemented HTTPS, which secures the connection between website and user. At the time, HTTPS was introduced as a lightweight ranking signal, but Google had already hinted at the possibility of making encryption more important once webmasters had had the time to implement it.

2015 – Mobile Update

This update was dubbed ‘Mobilegeddon’ by the SEO industry, because many expected it to completely shake up the search results. By 2015 more than 50% of Google’s search queries were already coming from mobile devices, which probably led to this update. The Mobile Update gave mobile-friendly sites a ranking advantage in Google’s mobile search results. In spite of its dramatic nickname, the mobile update didn’t instantly upend most people’s rankings. Nevertheless, it was an important shift that heralded the ever-increasing importance of mobile.

2015 – RankBrain

RankBrain is a state-of-the-art Google algorithm that employs machine learning to handle queries. It can make guesses about words it doesn’t know, find words with similar meanings, and then offer relevant results. To keep improving, the RankBrain algorithm analyzes past searches and determines which results served them best.

Its release marked another big step for Google towards better deciphering the meaning behind searches and serving the best-matching results. In March 2016, Google revealed that RankBrain was one of its three most important ranking signals. Unlike other ranking factors, you can’t really optimize for RankBrain in the traditional sense, other than by writing quality content. Nevertheless, its impact on the results pages is undeniable.

2016 – Possum

In September 2016 it was time for another local update. The Possum update applied several changes to Google’s local ranking filter to further improve local search. After Possum, local results became more varied, depending more on the physical location of the searcher and the phrasing of the query. Some businesses which had not been doing well in organic search found it easier to rank locally after this update, which suggests that it made local search more independent of the organic results.

2018 – (Mobile) Speed Update

Acknowledging users’ need for fast delivery of information, Google made page speed a ranking factor for mobile searches with this update, as was already the case for desktop searches. The update mostly affected sites with a particularly slow mobile version.

2018 – Medic

This broad core algorithm update caused quite a stir for those affected, leading to some shifts in ranking. While a relatively high number of medical sites were hit with lower rankings, the update wasn’t solely aimed at them and it’s unclear what its exact purpose was. It may have been an attempt to better match results to searchers’ intent, or perhaps it aimed to protect users’ wellbeing from (what Google decided was) disreputable information.

2019 – BERT

Google’s BERT update was announced as the “biggest change of the last five years”, one that would “impact one in ten searches.” It’s a machine learning algorithm: a neural network-based technique for natural language processing (NLP). The name BERT is short for Bidirectional Encoder Representations from Transformers.

BERT can figure out the full context of a word by looking at the words that come before and after it. In other words, it uses the context and relations of all the words in a sentence, rather than processing them one by one in order. The result is a big improvement in interpreting a search query and the intent behind it.
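The difference between reading in one direction and reading in both can be shown with a tiny Python sketch. To be clear, this is not BERT itself, which is a large neural network. The two helper functions and the example query are hypothetical, and they only show which surrounding words are visible in each case.

```python
def left_context(tokens, index):
    """Words visible when reading strictly left to right: only those before the target."""
    return tokens[:index]


def bidirectional_context(tokens, index):
    """Words visible when looking both ways: everything except the target word itself."""
    return tokens[:index] + tokens[index + 1:]


# Hypothetical query: is "bank" a riverside or a financial institution?
tokens = "bank of the river".split()
target = tokens.index("bank")

print(left_context(tokens, target))           # []
print(bidirectional_context(tokens, target))  # ['of', 'the', 'river']
```

Reading left to right, nothing has been seen yet when ‘bank’ comes up, so there is no clue about its meaning. Looking in both directions, the word ‘river’ is available to settle the question.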

Expectations for future Google updates

As you can see, Google has become increasingly advanced since the early 2010s. Its major updates early in the decade focused on battling spammy results and sites trying to cheat the system. But as time progressed, updates were increasingly geared towards giving desktop, mobile and local searchers exactly what they’re looking for. While the algorithm was advanced to begin with, the additions over the years, including machine learning and NLP, make it absolutely state of the art.

With the recent focus on intent, it seems likely that Google Search will continue to focus its algorithm on perfecting its interpretation of search queries and styling the results pages accordingly. That seems to be its current focus as it works towards its mission “to organize the world’s information and make it universally accessible and useful.” But whatever direction it takes, being the best result and working on having an excellent site will always be the way to go!

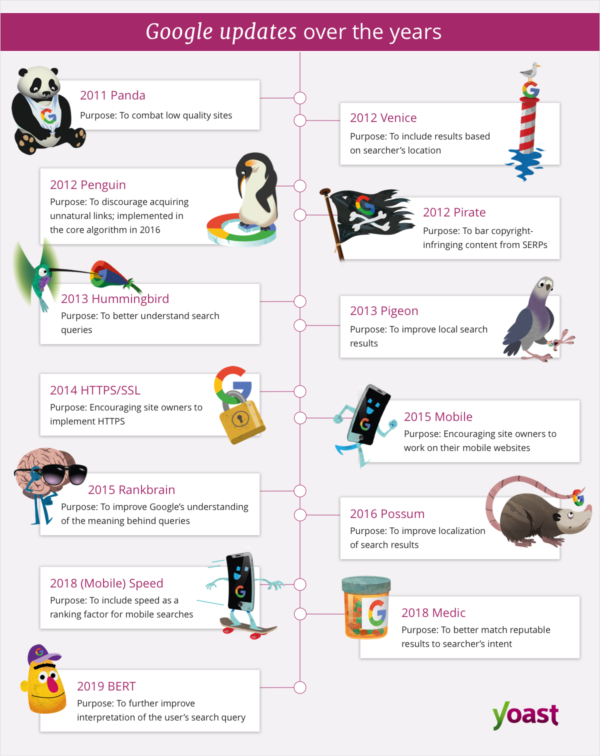

Feeling a bit overwhelmed by all the different names and years? Don’t worry! We made a handy infographic that shows when each Google update happened and briefly describes what the purpose was.

The post A brief history of Google’s algorithm updates appeared first on Yoast.